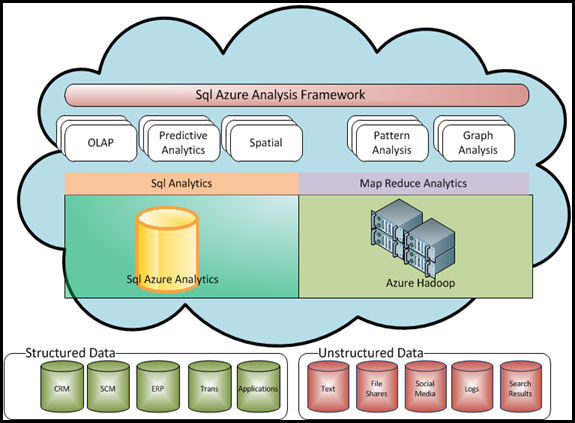

90% of applications developed on premises or in the cloud are likely to have a database of some kind. The options are many, ranging from blobs, tables, and big data (i.e. NoSQL) to SQL Azure.

Over the last decade most databases have been relational in nature; it is only in the last two years that we have seen the advent of NoSQL databases. In this post I go over the areas that need special consideration for SQL Azure. Below are a few of them.

- SQL Azure is not Microsoft SQL Server; rather, it is a managed version of SQL Server. SQL Database is a multi-tenant system in which many database instances are hosted on a single SQL Server running on a physical server, or node. That is why you should expect performance characteristics very different from those of a standalone SQL Server.

- Every instance of SQL Database in Azure has one primary and two secondary replicas. SQL Database uses quorum commit, in which a commit is deemed successful as soon as the primary and one secondary replica have completed the commit. This is one of the reasons why writes are slower.

- Security in SQL Azure

- Use secure, encrypted connections over the TDS protocol on port 1433.

- Handle authentication and authorization separately: SQL Database provides security administration to create logins and users in a way similar to SQL Server. Security administration at the database level is almost the same as in SQL Server. However, server-level administration is different because SQL Database is assembled from distinct physical machines. Therefore, SQL Database uses the master database for server-level administration in order to manage users and logins.

- Connection timeouts: SQL Database offers high availability (HA) out of the box by maintaining three replicas spread across different nodes in the datacenter and considering a transaction complete as soon as two of the three replicas have been updated. In addition, a fault detection mechanism automatically promotes one of the database copies when needed: when a fault is detected, the primary replica is replaced by a secondary replica. However, this can trigger a brief reconfiguration in SQL Database management and result in a short connection timeout (up to 30 seconds) to the database.

- Backup issues: HA is enforced only within the scope of the datacenter itself; there is no data redundancy across geographic locations. This means that any major datacenter fault can cause a permanent loss of data.

- Use of CQRS or EF: With slow writes and a need for fast reads, CQRS can be considered because it segregates reads from writes. Given the uncanny love developers have for EF (Entity Framework), a short prototype can help you decide between the two. With EF, the following areas are to be watched out for.

- Preventing exceptions resulting from closed connections in the connection pool: EF uses ADO.NET to handle its database connections. Because creating database connections can be time-consuming, a connection pool is used, and this can cause a problem. Specifically, SQL Database and the cloud environment can close a database connection for various reasons, such as a network problem or a resource shortage. Even though the connection has been closed, it remains in the connection pool, and the EF ObjectContext will try to grab the closed connection from the pool, resulting in an exception. To mitigate this issue, use a retry policy for the entity connection, as offered by the Transient Fault Handling Application Block, so that the command can be attempted multiple times.

- Eager loading: EF offers both eager and lazy loading, and developers are often unaware of how queries are fired at the database, which can result in performance degradation. Lazy loading may add to the problem: reads can be slow if the data is spread across separate tables, because multiple round trips are required to traverse each object. This problem can be eliminated by using eager loading, which joins information from separate tables (connected by foreign keys) in a single query.

- Avoid LINQ queries that use distributed transactions.

- Handling connection failures: SQL Database is a distributed system which applications access over a network in a Windows Azure datacenter. Connections across this network are subject to failures that can lead to the connections being killed.

- Designing applications to handle connection failures: The first step in handling connection failures is to determine whether the failure is transient. If it is, the application should wait a brief time for the transient problem to be resolved and then retry the operation until it succeeds. Using the Transient Fault Handling Application Block is a must here.

- Throttling: The physical resources on which SQL Database is hosted are shared among many applications. Microsoft does not provide any way to reserve a guaranteed level of resource availability. Instead, SQL Database throttles connections to instances that consume too many resources. SQL Database measures resource use over a 10-second interval, referred to as the throttling sleep interval. Instances that use many resources in these intervals may be throttled for one or more sleep intervals until resource usage returns to acceptable levels. There are two types of throttling, soft and hard, depending on how severely the resource usage limits are exceeded. A high rate of transient connection failures is a sign of throttling issues.

- Avoid chatty applications. Use Windows Azure caching techniques to cut down on chatty database calls.

- Monitoring limitations: SQL Database has fewer monitoring options than SQL Server. Various monitoring methods available in SQL Server, such as audit login, running traces and performance counters, are not supported in SQL Database. However, one monitoring option, Dynamic Management Views (DMVs), is supported, although not to the same extent as in SQL Server.

- Backup and restore: SQL Database provides fault tolerance internally by keeping three copies of all committed data. However, even the strongest database box won’t prevent data corruption due to hardware malfunctions, internal application faults or human errors. Therefore, the DBA for any application needs to be concerned with database backup and restore. Recommended practices are given later in this post.

- Scaling out the database: SQL Database instance size is limited and performance is not guaranteed.

- Synchronizing data: Where should the SQL Database instance be located? Windows Azure is a global cloud service available in eight datacenters on three continents. A website can be hosted in multiple Windows Azure datacenters, and Windows Azure Traffic Manager can be configured to allow users to access the closest datacenter. The question therefore arises of where to locate the SQL Database instance to store application data. Which datacenter should it be in?

To manage network access, use the SQL Database service firewall, which handles network access control. You can configure firewall rules that grant or deny access to a specific IP address or a range of IP addresses. The firewall can also add some latency.
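To make the idea of IP range rules concrete, here is a minimal sketch of how such a rule could be evaluated. It is not the service's implementation: the `FirewallRule` class, the `is_allowed` helper, and the "deny unless a rule matches" default are all assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    """Hypothetical rule: grants access to an inclusive range of IP addresses."""
    name: str
    start_ip: str
    end_ip: str

    def allows(self, client_ip: str) -> bool:
        ip = ipaddress.ip_address(client_ip)
        low = ipaddress.ip_address(self.start_ip)
        high = ipaddress.ip_address(self.end_ip)
        return low <= ip <= high


def is_allowed(client_ip: str, rules: list) -> bool:
    """Assumed policy: deny by default, allow if any rule covers the address."""
    return any(rule.allows(client_ip) for rule in rules)


rules = [FirewallRule("office", "203.0.113.10", "203.0.113.20")]
print(is_allowed("203.0.113.15", rules))  # inside the range
print(is_allowed("203.0.113.21", rules))  # just outside the range
```

A single IP address is simply a rule whose start and end are the same.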

To protect your data, be sure to back up the SQL Database instance to Windows Azure storage in a different datacenter. To reduce data transfer costs, you can choose a datacenter in the same region. There is currently no snapshot recovery for SQL Database; with the acquisition of StorSimple this may become possible.

To mitigate the risk of the connection timeout that follows a replica failover, it is a best practice to implement an application retry policy for reconnecting to the database. To reduce the overall reconnection time, consider a back-off reconnection strategy that increases the wait before each successive connection attempt.
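The back-off reconnection strategy can be sketched roughly as follows. This is an illustration only, not the Transient Fault Handling Application Block: `TransientError`, `retry_with_backoff`, and the default delays are hypothetical names and values chosen for the example.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for a failure that is worth retrying."""


def retry_with_backoff(operation, max_attempts=5, base_delay=0.5,
                       max_delay=30.0, sleep=time.sleep):
    """Run operation(), doubling the wait after each transient failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the failure
            # 0.5s, 1s, 2s, ... capped at max_delay
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay)


# Usage: an operation that fails twice before the connection comes back.
attempts = {"count": 0}

def open_connection():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("connection dropped")
    return "connected"

print(retry_with_backoff(open_connection, base_delay=0.01))
```

The cap of 30 seconds mirrors the timeout window mentioned earlier, but it is an assumption; tune it for your own application. Adding a small random jitter to each delay is a common refinement when many clients reconnect at once.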

Specifically, when there is a failure in either a data node or the SQL Server instance it hosts, SQL Database moves activity off of that node or instance. Each primary replica it hosts is demoted and an associated secondary replica is promoted. As part of this process, connections to the now demoted primary server are killed. However, it can take several seconds for the information about the new primary replica to propagate through SQL Database, so it is essential that applications handle this transient failure appropriately.

In addition, SQL Database is a multi-tenant system in which each data node hosts many instances. Connections to these instances compete for the resources provided by the data node. In times of high load, SQL Database can throttle connections that are consuming a lot of resources. This throttling represents another transient failure that applications need to handle appropriately.
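Deciding whether a failure is transient usually comes down to inspecting the error number returned by the server. The sketch below assumes three error numbers commonly documented as transient for SQL Database (40197, 40501 for "service busy" throttling, and 40613); treat the list as an assumption and check it against the current documentation. `DatabaseError` is a hypothetical exception type, not one from a real driver.

```python
# Assumed set of transient error numbers; verify against current documentation.
THROTTLING_ERROR = 40501            # service busy, retry later
TRANSIENT_ERROR_NUMBERS = frozenset({40197, THROTTLING_ERROR, 40613})


class DatabaseError(Exception):
    """Hypothetical driver exception carrying the server error number."""

    def __init__(self, number, message=""):
        super().__init__(message)
        self.number = number


def is_transient(error):
    """True if the error looks like a failover or throttling hiccup."""
    return (isinstance(error, DatabaseError)
            and error.number in TRANSIENT_ERROR_NUMBERS)


def is_throttled(error):
    """True if the error indicates the connection was throttled."""
    return isinstance(error, DatabaseError) and error.number == THROTTLING_ERROR


print(is_transient(DatabaseError(40501, "service busy")))   # throttling
print(is_transient(DatabaseError(2627, "duplicate key")))   # not transient
```

Counting how often `is_throttled` fires over time is one simple way to tell whether throttling, rather than failover, is behind a high rate of transient failures.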

First, consider scheduling a daily backup task to create recent restore points.

Second, consider setting the backup target to Windows Azure storage by creating a BACPAC file from the SQL Database instance and exporting it to Windows Azure blob storage; you can use either the Windows Azure portal or an API command (check out sqldacexamples for more information). If you do choose to back up to Windows Azure storage, make sure that the storage account is located in a different datacenter (but in the same region, to minimize data transfer costs) to prevent loss in the event of a major datacenter failure.

Third, consider using Microsoft SQL Data Sync to sync data between SQL Database instances (copy redundancy) or to sync a SQL Database instance to an on-premises Microsoft SQL Server database (be aware that SQL Data Sync currently does not provide versioning). Finally, it’s worth mentioning that if you are planning a major application upgrade, you should manually back up your databases to guard against an unexpected regression.

SQL Database is a multi-tenanted system in which the physical resources are shared among many tenants. This resource sharing affects both the maximum instance size supported by SQL Database and the performance characteristics of each instance. Microsoft currently limits the instance size to 150 GB and does not guarantee a specific performance level.

Using sharding to scale out the database with SQL Database Federations

The solution to both the database size problem and the performance problem is to scale the database horizontally into more than one database instance using a technique known as sharding.

SQL Database Federations is the managed sharding feature of SQL Database. A federated database comprises a root database to which all connections are made and one or more federations. Each federation comprises one or more SQL Database instances to which federated data is distributed depending on the value of a federation key that must be present in every federated table. A restriction is that the federation key must be present in each clustered or unique index in the federated tables. The only distribution algorithm currently supported is range, with the federation key restricted to one of a small number of data types.
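Range distribution can be pictured as a sorted list of lower bounds, one per federation member, with each key routed to the member whose range contains it. The sketch below is a toy model of that lookup, not how SQL Database implements it; `RangeFederation` and the member names are hypothetical.

```python
import bisect


class RangeFederation:
    """Toy model: member i holds keys in [low_bounds[i], low_bounds[i + 1])."""

    def __init__(self, low_bounds, members):
        if len(low_bounds) != len(members) or not low_bounds:
            raise ValueError("need one low bound per member")
        if list(low_bounds) != sorted(set(low_bounds)):
            raise ValueError("low bounds must be strictly increasing")
        self.low_bounds = list(low_bounds)
        self.members = list(members)

    def member_for(self, key):
        """Return the member whose range contains the federation key."""
        index = bisect.bisect_right(self.low_bounds, key) - 1
        if index < 0:
            raise KeyError(f"key {key} is below the lowest range")
        return self.members[index]


federation = RangeFederation([0, 1000, 5000], ["member_0", "member_1", "member_2"])
print(federation.member_for(42))     # falls in [0, 1000)
print(federation.member_for(1000))   # falls in [1000, 5000)
```

Because every federated table carries the federation key, the same lookup decides where any row of any federated table lives.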

SQL Database Federations provides explicit support to split a federation instance in two and ensure that the federated data is allocated to the correct database in a transactionally consistent manner. SQL Federations also provides support to merge two instances, but this causes the data in one of the instances to be lost.
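As a rough picture of what a split does to the data, the sketch below divides the rows of one member at a chosen key so that every row ends up in exactly one of the two new members. It only models the allocation of rows; the transactional guarantees described above are provided by the service and are not reproduced here. `split_member` and its arguments are hypothetical.

```python
def split_member(rows, low, high, split_at):
    """Split a member covering [low, high) into [low, split_at) and [split_at, high).

    rows maps federation key -> row data. Returns two (low, high, rows) tuples.
    """
    if not low < split_at < high:
        raise ValueError("split point must fall strictly inside the range")
    lower = {key: row for key, row in rows.items() if key < split_at}
    upper = {key: row for key, row in rows.items() if key >= split_at}
    return (low, split_at, lower), (split_at, high, upper)


rows = {1: "alice", 50: "bob", 99: "carol"}
left, right = split_member(rows, low=0, high=100, split_at=50)
print(left)
print(right)
```

No row is dropped by the split: the two resulting row sets are disjoint and together equal the original.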

An application using a federated database connects to the root database and specifies the USE FEDERATION statement to indicate which instance the connection should be routed to. This provides the benefit of connection pooling on both the client and the server.
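For illustration, a small helper that builds such a routing statement might look like the following. The statement shape (`USE FEDERATION name (key = value) WITH RESET, FILTERING = OFF`) is how I recall the Federations syntax, so verify it against the official reference before relying on it; the helper name and the federation and key names are hypothetical.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def use_federation_statement(federation, distribution, key, filtering=False):
    """Build the routing statement for an integer federation key.

    Names are validated and the key must be an int, so nothing
    user-supplied is spliced into the statement unchecked.
    """
    for name in (federation, distribution):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"invalid identifier: {name!r}")
    if isinstance(key, bool) or not isinstance(key, int):
        raise ValueError("this sketch only supports integer federation keys")
    mode = "ON" if filtering else "OFF"
    return (f"USE FEDERATION {federation} ({distribution} = {key}) "
            f"WITH RESET, FILTERING = {mode}")


print(use_federation_statement("Orders_Fed", "CustId", 100))
```

The application would send this statement on its connection to the root database before issuing queries against the federated tables.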

SQL Federations provides the ability to scale out a SQL Database application to a far larger aggregate size than can be provided by a single instance. Since each individual instance has the same performance characteristics, SQL Federations allows an application to scale out performance by using many instances.

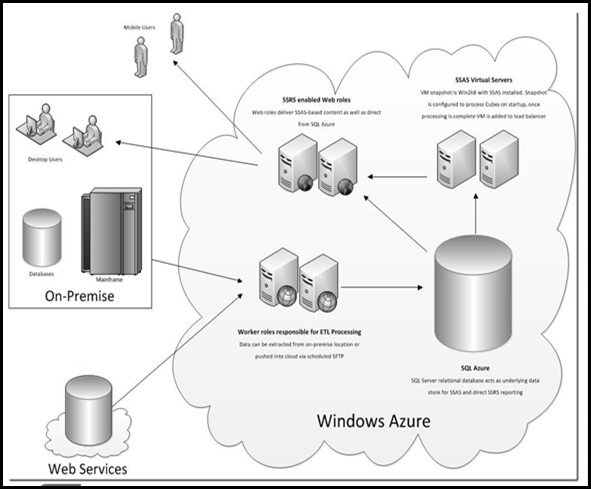

There is an increasing interest in hybrid solutions, in which part of the application remains on-premises and part is migrated to Windows Azure. Again, the problem arises of how to handle data. Should it be stored on premises and a VPN set up to allow cloud services hosted in Windows Azure to access it? Or should it be stored in the cloud?

Using Microsoft SQL Data Sync

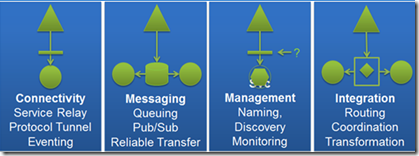

Microsoft SQL Data Sync provides a solution for both of these situations. It can be used to configure bi-directional data synchronization between two SQL Database instances, or between a Microsoft SQL Server database and a SQL Database instance. It uses a hub-and-spoke topology in which the hub must be a SQL Database instance.

Consequently, Microsoft SQL Data Sync can be used together with Windows Azure Traffic Manager to create truly global applications where both the cloud service and the SQL Database instance are local to each datacenter. This minimizes application latency, which improves the user experience.

Similarly, Microsoft SQL Data Sync can be configured to synchronize data between an on-premises SQL Server database and a SQL Database instance. This removes the need to privilege one location over the other, and again improves application performance by keeping the database close to the application.