The Big Data landscape is forever changing. While studying what is on the market and how to get a handle on Big Data, I found that a new name appears in the landscape every two weeks. The flip side is that some names vanish just as quickly. It would be good to get a basic understanding of Big Data. In this post I don't talk about a specific technology; I'm just trying to get the understanding right. Big Data tools can be classified into 3 areas:

- Batch

- Interactive

- Real-time Tools.

The real challenge is understanding how the Big Data space is classified at its base. This is what the landscape looked like as of early January 2013; it has changed since.

Broadly, this gives an idea of all the different things in the Big Data landscape. The best way to understand the Big Data space is through the following high-level concepts.

Batch Processing

The data provided needs to be processed, and large amounts of it need to be processed fairly quickly. This is most commonly seen in the world of Hadoop, or HDInsight on Windows Azure. Essentially, this is what it entails:

- The data is spread over a number of disks on each of the nodes, and a distributed cluster processes that data.

- The volume of data is generally in the TB to PB range.

- The primary programming model is MapReduce: operations are mapped out to each of the machines, and the results are then aggregated using the reduce function.

The map and reduce functions typically have to be written by a developer. The model originated at Google as Map/Reduce. Currently there are 2 open source projects in this space: Hadoop and Spark. Spark provides primitives for in-memory cluster computing: your job can load data into memory and query it repeatedly much more quickly than with disk-based systems like Hadoop MapReduce.
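To make the map and reduce steps concrete, here is a minimal single-process sketch of a word count in Python. It is not Hadoop or Spark code; the function names (`map_phase`, `shuffle`, `reduce_phase`) are made up for illustration, and a real framework would run the map and reduce calls on different machines.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group emitted values by key, as a framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: aggregate all values collected for one key."""
    return key, sum(values)

def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())

counts = word_count(["big data is big", "data is everywhere"])
```

The developer only writes `map_phase` and `reduce_phase`; distribution, grouping and retries are what a framework like Hadoop adds around them.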

Interactive Analysis

Large sets of data need to be analysed interactively. Two techniques are generally employed here. The first is column-based databases, which hold sequentially indexed data and can do table scans very quickly. The second is to keep as much data as possible in an in-memory cache. These are pure-play interactive platforms that can analyse large sets of data at very low latency. One good example is Palantir’s Project Horizon, which mainly builds interactive analysis of big data for the US government. Below is a video that explains it in more depth. What seems to be important in these kinds of implementations:

- Data is never duplicated.

- Compact in-memory representation: the in-memory data representation needs to be really small, with a memory footprint of up to 16GB. Compression must be lightweight; dictionary and prefix-based compression and localized block-based schemes are effective.

- The analysis functionality is not business specific; it is more like “analyse any kind of data”.

- Partitioning of processing: shared-nothing/sharded architecture.

- A partition ID for objects would be a good idea, but sending half a billion partition IDs from the client to the server would not be. Another way is to hash object IDs into partition subsets using the same hash function, and use that hash for the query.

- Other options for interactive analysis are Drill, Shark, Impala and HBase. This line of work originally started with Google Dremel.
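As an illustration of the lightweight dictionary compression mentioned in the list above, here is a minimal Python sketch. It is an assumption of how such a scheme could look, not Palantir's implementation; the function names are hypothetical.

```python
def dictionary_encode(column):
    """Store each distinct value once and replace values with small integer codes."""
    dictionary = {}
    codes = []
    for value in column:
        if value not in dictionary:
            dictionary[value] = len(dictionary)
        codes.append(dictionary[value])
    values = list(dictionary)  # position -> original value (insertion order)
    return values, codes

def dictionary_decode(values, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [values[code] for code in codes]

def count_equal(values, codes, target):
    """Scan the encoded column without decoding: compare integer codes only."""
    if target not in values:
        return 0
    code = values.index(target)
    return sum(1 for c in codes if c == code)

cities = ["London", "Paris", "London", "Tokyo", "Paris", "London"]
values, codes = dictionary_encode(cities)
```

The scan in `count_equal` touches only small integers, which is one reason this kind of compression suits fast table scans.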

<Video on Palantir’s Interactive Analysis Platform – Project Horizon>

Stream Processing

Hadoop’s batch-oriented processing is sufficient for many use cases, especially where the frequency of data reporting doesn’t need to be up-to-the-minute. However, batch processing isn’t always adequate, particularly when serving online needs such as mobile and web clients, or markets with real-time changing conditions such as finance and advertising.

The real-time use case is an obvious one. If you need to respond or be warned in real-time or near real-time, for example, security breaches or a service impacting event on a VoIP or video call, the high initial latency of batch oriented data stores such as Hadoop is not sufficient.

Moreover, the data is not valuable without analysis. In a typical real-time scenario data is fed from multiple sources, and analysing this data on the fly is seen as a business requirement in multiple industry segments.

Streaming Big Data analytics needs to address two areas. First, the obvious use case, monitoring across all input data streams for business exceptions in real-time. This is a given. But perhaps more importantly, much of the data held in Big Data repositories is of little or no business value, and will never end up in a management report. Sensor networks, IP telecommunications networks, even data center log file processing – all examples where a vast amount of ‘business as usual’ data is generated. It’s therefore important to understand what’s being stored, and only persist what’s important (which admittedly, in some cases, may be everything). For many applications, streaming data can be filtered and aggregated prior to storing, significantly reducing the Big Data burden, and significantly enhancing the business value of the stored data.
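The idea of filtering and aggregating a stream before storing it can be sketched in a few lines of Python. This is only an illustration: the event fields (`ts`, `service`, `latency_ms`) and the 500 ms threshold are assumptions, not taken from any particular product.

```python
from collections import Counter

def filter_and_aggregate(events, window_seconds=60, threshold_ms=500):
    """Drop 'business as usual' events and count the rest per (window, service).

    `events` is an iterable of dicts with hypothetical keys:
    'ts' (epoch seconds), 'service' and 'latency_ms'.
    """
    summary = Counter()
    for event in events:
        if event["latency_ms"] < threshold_ms:
            continue  # normal traffic: not persisted
        window_start = event["ts"] - event["ts"] % window_seconds
        summary[(window_start, event["service"])] += 1
    return summary

events = [
    {"ts": 5, "service": "voip", "latency_ms": 40},
    {"ts": 20, "service": "voip", "latency_ms": 900},
    {"ts": 59, "service": "voip", "latency_ms": 700},
    {"ts": 61, "service": "video", "latency_ms": 1200},
]
summary = filter_and_aggregate(events)
```

Four raw events shrink to two summary rows here; only the summary would need to be persisted.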

Stream processing was popularized by Storm, a project from Twitter. The need there was, first, to store data such as images and feeds and, second, to aggregate it very quickly.

Storm and Apache Kafka are some of the stream processing platforms.

Quick Comparison Sheet

The No SQL Paradigm

The relational database, based on Codd's model, came into existence in the 70’s, driven largely by IBM, with multiple query language variants.

In the 80’s a lot of applications were being built, and the need for speed became important. Systems such as Ingres came up with the idea of saving some part of the big database in another, smaller area, which they termed an index, and this improved performance.

Then along came the web, which changed the dynamics of the database, and that is where the concept of NoSQL came from. NoSQL means Not Only SQL.

The focus of any relational database design is on “how can we store this” without duplicating data. Very little focus is given to how we use it. In current times the focus is more on “How do I use this data”, obviously with the best performance and scalability at the center of the conversation. NoSQL is ideally focused on “How do I use this data”: for example, is the data going to be used for a job queue, a shopping cart, a CMS or one of many other usage scenarios? Below is the JSON of a shopping cart item, which consists of id, user id and line items all in one place, and that’s how it actually gets used.

    {
      "id": 3,
      "user_id": 25,
      "line_items": [
        { "sku": "123", "price": 1000, "name": "Nunemaker Autograph" },
        { "sku": "124", "price": 1000, "name": "Banker Autograph" }
      ],
      "shipping_address": {
        "street": "123 Some St.",
        "city": "South Bend",
        "state": "IN",
        "zip": "11216"
      },
      "subtotal": 2000,
      "tax": 140,
      "total": 2140
    }

It's best to keep all related data in one place because that’s the way it's going to be used. In the relational world the emphasis is on how we are going to store and separate it, whereas in the NoSQL world it is kept all together and usage becomes far simpler.

NoSQL is about the data, or pro-data, and it's about how you use the data. The SQL vs. NoSQL debate is never about scalability, but when you get to the point of scaling it is far easier to scale in the NoSQL world.
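A small Python sketch can show the difference in usage. The document shape mirrors the shopping cart above (trimmed to the fields needed); the two "tables" and the function names are hypothetical stand-ins for a normalized relational layout.

```python
# Document style: everything the application needs is in one place.
cart = {
    "id": 3,
    "user_id": 25,
    "line_items": [
        {"sku": "123", "price": 1000, "name": "Nunemaker Autograph"},
        {"sku": "124", "price": 1000, "name": "Banker Autograph"},
    ],
    "tax": 140,
}

def cart_total(doc):
    """One lookup, then plain traversal of the document."""
    subtotal = sum(item["price"] for item in doc["line_items"])
    return subtotal + doc["tax"]

# Relational style: the same data normalized into two "tables".
carts = [{"id": 3, "user_id": 25, "tax": 140}]
line_items = [
    {"cart_id": 3, "sku": "123", "price": 1000},
    {"cart_id": 3, "sku": "124", "price": 1000},
]

def cart_total_relational(cart_id):
    """The application has to join the tables back together before use."""
    cart_row = next(c for c in carts if c["id"] == cart_id)
    subtotal = sum(li["price"] for li in line_items if li["cart_id"] == cart_id)
    return subtotal + cart_row["tax"]
```

Both functions return the same total; the difference is how much reassembly the caller has to do.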

No SQL is analogous to OLTP

In the coming sections I will concentrate on some of the important interactive analysis and streaming platforms.

HBase - Interactive Platform

HBase is used by Facebook. All the messages that one sends on Facebook actually go through HBase. It's all about high-volume, super-fast INSERTs. It's also good at volatile READs. HBase was designed to be a highly transactional system, owing to the fact that recent data is held largely in memory.

HBase is a column store database that keeps recent writes in memory. This has to be understood properly; in particular, a column store is not the same as an RDBMS column. It is a very efficient INSERT, or write, engine. The definition of a database is also different for HBase.

HBase relies on Hadoop for its persistent storage, which pretty much explains the rest of the figure.

ZooKeeper is a distributed coordination service that keeps track of all the HRegion Servers. Whatever is written into memory is also written to HDFS. The way HDFS works by default, if you lose a node you already have 3 replicas.
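The "write to memory, but also persist" behaviour can be illustrated with a toy Python class. This is not the HBase API; `ToyStore`, its attributes and the flush threshold are all hypothetical, and plain Python lists stand in for files on HDFS.

```python
class ToyStore:
    """Toy model of a log-then-memory write path (not the HBase API)."""

    def __init__(self, flush_threshold=3):
        self.wal = []            # stands in for a write-ahead log on HDFS
        self.memstore = {}       # in-memory buffer of recent writes
        self.flushed_files = []  # stands in for immutable files on HDFS
        self.flush_threshold = flush_threshold

    def put(self, row_key, value):
        self.wal.append((row_key, value))  # durability first
        self.memstore[row_key] = value     # then the fast in-memory write
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        """Write the buffer out as one sorted, immutable file and clear it."""
        self.flushed_files.append(dict(sorted(self.memstore.items())))
        self.memstore = {}

    def get(self, row_key):
        """Check memory first, then flushed files from newest to oldest."""
        if row_key in self.memstore:
            return self.memstore[row_key]
        for flushed in reversed(self.flushed_files):
            if row_key in flushed:
                return flushed[row_key]
        return None

store = ToyStore()
for i in range(4):
    store.put(f"msg-{i}", f"hello {i}")
```

Writes stay cheap because they are an append plus a dictionary update; the sorted flush happens only occasionally.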

Cassandra - Interactive Platform

Cassandra is very similar to HBase in terms of functionality. It has a SQL-like query language called CQL. Both HBase and Cassandra are very good at writes, are super fast, and lean heavily on memory.

Facebook initially started out with Cassandra and then moved on to HBase. The real reason for the move to HBase is not very clear.

Drill

Drill is an Apache Incubator project inspired by Dremel; it is an open source reimplementation of Dremel. It is designed to scale to 10k servers and query PBs in minutes. A traditional petabyte-scale MapReduce job takes hours today; Drill apparently takes minutes and in some cases seconds.

A little background on Dremel

Some Google terminology associated with Drill: Big Data and Big Query. Big Data in the industry is, by definition, about 500 million rows and above. Big Data has a limitation on the size of a column of 64 KB.

Big Data at Google: what does it mean in numbers?

- 60 hours of YouTube video uploaded per minute

- 100 million gigabytes search Index / Analysis of 2010

- 425 million Gmail users.

Given the size of the data, a relational database is an obvious no. That leaves the option of doing a full table scan, which can be expensive in the relational world; that’s where Dremel was born.

Big Query is the externalization of this technology.

What does a Big Query query really look like? For example, finding the top installed market apps:

    SELECT TOP(appId, 20) AS app, COUNT(*) AS count FROM installing.2012
    ORDER BY count DESC

The result comes back in less than 20 seconds.
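For readers who think in code rather than SQL, the same "top N by count" logic can be sketched in Python. This only mirrors the semantics on an in-memory list; the `installs` sample data and the `top_apps` name are made up, and it says nothing about how Big Query executes the query.

```python
from collections import Counter

def top_apps(install_events, n=20):
    """Count installs per appId and return the n most frequent, highest first."""
    counts = Counter(event["appId"] for event in install_events)
    return counts.most_common(n)

installs = [
    {"appId": "maps"}, {"appId": "mail"}, {"appId": "maps"},
    {"appId": "chat"}, {"appId": "maps"}, {"appId": "mail"},
]
top2 = top_apps(installs, n=2)
```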

Where can we use Big Query?

- Game and social media analytics

- Infrastructure monitoring

- Advertising campaign optimization

- Sensor data analysis.

Apache Drill is an Apache Incubator project for interactive analysis of large datasets. MapReduce is a batch-mode tool and there is latency associated with it. There are cases where one would like to query data in near real time; some of the scenarios are:

- Ad hoc analysis with interactive tools

- Real-time dashboards

- Event/trend detection and analysis

- Network intrusion

- Fraud

- Failures

The key point in Dremel is that it uses a nested data model.

Apache Drill is likewise a system designed to support nested data.

- Flat records are the simplest case of nested data, that is, root only.

- It supports both schema-based (Protocol Buffers, Apache Avro) and schema-less (JSON, BSON) formats.
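To see why a flat record is just the root-only case of nested data, here is a Python sketch that walks a nested record and emits one (column path, value) pair per leaf. It is a simplification for illustration: Dremel's actual encoding also tracks repetition and definition levels, which this sketch leaves out, and the `flatten` helper is hypothetical.

```python
def flatten(record, prefix=""):
    """Yield (column_path, value) pairs for every leaf in a nested record."""
    if isinstance(record, dict):
        for key, value in record.items():
            path = f"{prefix}.{key}" if prefix else key
            yield from flatten(value, path)
    elif isinstance(record, list):
        for item in record:
            # Repeated field: same path, one value per element.
            yield from flatten(item, prefix)
    else:
        yield prefix, record

nested = {
    "id": 3,
    "line_items": [
        {"sku": "123", "price": 1000},
        {"sku": "124", "price": 1000},
    ],
}
nested_pairs = list(flatten(nested))
flat_pairs = list(flatten({"id": 1, "name": "a"}))
```

For the flat record every path is a single top-level name, so the nested handling degenerates to ordinary columns.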

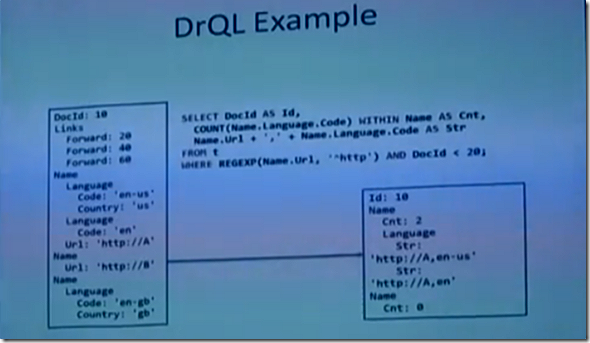

Which nested query languages are supported by Drill?

- DRQL

  - SQL-like query language for nested data

  - Compatible with Google BigQuery/Dremel

  - Designed to support efficient column-based processing

- Mongo Query Language

- Other languages/programming models can plug in.

The data model is a nested Data Model for Document split across Basic Document Data and URL entries. The query is very SQL like.

How does Data Flow work with Drill?

Data is loaded into the Hadoop cluster by one of many mechanisms, i.e. Hive, the HDFS command line, Map/Reduce or an NFS interface. The data in Hadoop is stored in row fashion. The Drill loader is responsible for converting the row-based data into columnar form.

Alternatively, a row-based query is allowed the first time, which in turn helps create a columnar copy of the data.
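The row-to-columnar conversion itself is simple to picture. The Python sketch below pivots a list of row dictionaries into one list per column; it is an in-memory illustration only, and `rows_to_columns` is a hypothetical helper, not part of Drill.

```python
def rows_to_columns(rows):
    """Pivot row-oriented records into a dict of column name -> list of values."""
    columns = {}
    for index, row in enumerate(rows):
        # A column first seen in a later row is back-filled with None.
        for name in row:
            columns.setdefault(name, [None] * index)
        for name, values in columns.items():
            values.append(row.get(name))
    return columns

rows = [
    {"app": "maps", "installs": 10},
    {"app": "mail", "installs": 7},
    {"app": "chat", "installs": 3, "region": "EU"},
]
columns = rows_to_columns(rows)
total_installs = sum(columns["installs"])  # touches one column only
```

An aggregate such as `total_installs` reads a single contiguous list instead of every full row, which is the main appeal of the columnar copy.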

What does the Query Execution of Drill look like at high level?

At a very high level, query execution involves the following steps.

- The driver submits the query (text) to the parser. The parser parses it, builds the abstract syntax tree and hands it over to the compiler.

- The compiler does the optimization and builds the execution plan.

- The execution engine is responsible for scheduling the plan against storage, which can be on any server.

Which typical SQL query components are supported?
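The parser, compiler and execution engine steps above can be sketched as a toy pipeline in Python. It handles only one hypothetical query shape (`SELECT COUNT(*) FROM table [WHERE column > number]`), does no optimization, and runs in a single process; none of the names come from Drill.

```python
import re

QUERY_PATTERN = re.compile(
    r"\s*SELECT\s+COUNT\(\*\)\s+FROM\s+(\w+)(?:\s+WHERE\s+(\w+)\s*>\s*(\d+))?\s*",
    flags=re.IGNORECASE,
)

def parse(query_text):
    """Parser: turn query text into a tiny syntax tree (a dict)."""
    match = QUERY_PATTERN.fullmatch(query_text)
    if match is None:
        raise ValueError("unsupported query")
    table, column, bound = match.groups()
    where = (column, int(bound)) if column else None
    return {"table": table, "where": where}

def compile_plan(tree):
    """Compiler: turn the tree into an ordered list of logical operators."""
    plan = [("scan", tree["table"])]
    if tree["where"]:
        plan.append(("filter", tree["where"]))
    plan.append(("aggregate", "count"))
    return plan

def execute(plan, tables):
    """Execution engine: run each operator of the plan in order."""
    rows, result = [], None
    for operator, argument in plan:
        if operator == "scan":
            rows = tables[argument]
        elif operator == "filter":
            column, bound = argument
            rows = [row for row in rows if row[column] > bound]
        elif operator == "aggregate":
            result = len(rows)
    return result

tables = {"installs": [{"n": 1}, {"n": 5}, {"n": 9}]}
plan = compile_plan(parse("SELECT COUNT(*) FROM installs WHERE n > 3"))
answer = execute(plan, tables)
```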

Query Components

- SELECT

Key logical operators

- Scan

- Filter

- Aggregate

- (Join)

What is so unique about the SCAN logical operator?

One of the architecture goals for Drill has been to support multiple formats, which is achieved by having a SCAN operator for each format. So, for example, if the query has a WHERE clause over JSON data, the query would involve

SELECT Json(data URI)

Fields and predicates are pushed down into the scan operator.
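Pushing fields and predicates into the scan can be pictured with a small Python generator. This is a sketch under the assumption of JSON-lines input; `json_scan` and its parameters are hypothetical and not Drill's operator interface.

```python
import json

def json_scan(lines, fields, predicate=None):
    """Scan JSON-lines input, applying the filter and projection inside the scan."""
    for line in lines:
        record = json.loads(line)
        if predicate is not None and not predicate(record):
            continue  # filtered out before leaving the scan
        yield {field: record.get(field) for field in fields}

raw_lines = [
    '{"app": "maps", "installs": 10, "region": "EU"}',
    '{"app": "mail", "installs": 7, "region": "US"}',
    '{"app": "chat", "installs": 12, "region": "US"}',
]
scanned = list(
    json_scan(raw_lines, fields=["app"], predicate=lambda r: r["installs"] > 8)
)
```

Operators further up the plan only ever see the rows and fields they asked for, so less data moves between stages.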

What’s actually involved in the Execution Engine?

The Drill execution engine has 2 layers:

- The operator layer, which is the serialization-aware layer. This is where individual records are processed. For example, to do a count on a table, a local table scan is done, followed by a local aggregation, and at the global aggregation step a sum is done.

- The execution layer, which is not serialization aware. All it does is transfer blobs across nodes and the cluster; this layer is responsible for communication between nodes, dependencies (what has to finish before what) and fault tolerance.
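The count example, local aggregation followed by a global sum, looks like this as a Python sketch. The partitions are plain lists standing in for data on different nodes; the function names are hypothetical.

```python
def local_count(partition, predicate):
    """Runs next to the data: scan one partition and count matching records."""
    return sum(1 for record in partition if predicate(record))

def global_count(partitions, predicate):
    """Global step: only the small partial counts are combined, via a sum."""
    partial_counts = [local_count(p, predicate) for p in partitions]
    return sum(partial_counts)

partitions = [[1, 5, 9], [2, 8], [7, 7, 7, 1]]
total = global_count(partitions, lambda value: value > 4)
```

Only one integer per partition crosses the network in this scheme, not the records themselves.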

A Complete Video on Drill can be found here

Impala – Interactive Analysis

Impala solves much the same problem as Drill, i.e. moving beyond Map/Reduce batch processing and its latency problems. It has very similar columnar storage and works much the same way as Drill. So for the sake of simplicity I will just get to the points of differentiation here.

However, it would be unfair of me to compare the two in detail in terms of maturity and functionality at the present time. As of October 29th, 2012, the Drill source code repository at [1] has code for a query parser and a plan parser which includes a reference plan evaluator which can perform scans against JSON-formatted data in flat files. Impala's tree at [2] includes a distributed query execution engine with support for cancellation, failure-detection, data modification via INSERT, integration with HDFS and HBase, JIT-compiled execution fragments via LLVM and a bunch of other stuff.

Impala is completely dependent on Hadoop. It utilizes HiveQL. Impala is progressing towards an MPP (Massively Parallel Processing) architecture.

The query processing is similar to Drill.

The strong drive from MSFT towards Impala is pretty evident: they want a good interactive tool in this arena, and without one they would be doomed.

Storm - Stream Analysis

Storm is a free and open source distributed real-time computation system. Storm makes it easy to reliably process unbounded streams of data, doing for real-time processing what Hadoop did for batch processing. Storm is simple, can be used with any programming language, and is a lot of fun to use!

Storm has many use cases: real-time analytics, online machine learning, continuous computation, distributed RPC, ETL, and more. Storm is fast: a benchmark clocked it at over a million tuples processed per second per node. It is scalable, fault-tolerant, guarantees your data will be processed, and is easy to set up and operate.

Storm integrates with the queuing and database technologies you already use. A Storm topology consumes streams of data and processes those streams in arbitrarily complex ways, repartitioning the streams between each stage of the computation however needed. Read more in the tutorial.
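The shape of a topology, a source feeding a chain of processing stages, can be imitated with Python generators. This is not the Storm API: the `spout` and `bolt` names only borrow Storm's vocabulary, and everything runs in one process with no distribution or fault tolerance.

```python
from collections import Counter

def sentence_spout(sentences):
    """Source stage: emits one sentence at a time."""
    for sentence in sentences:
        yield sentence

def split_bolt(stream):
    """Processing stage: splits each sentence into lower-cased words."""
    for sentence in stream:
        for word in sentence.split():
            yield word.lower()

def count_bolt(stream):
    """Processing stage: keeps a running count and emits it after every word."""
    counts = Counter()
    for word in stream:
        counts[word] += 1
        yield word, counts[word]

topology = count_bolt(split_bolt(sentence_spout(["storm is fast", "storm is fun"])))
emitted = list(topology)
```

Each stage consumes items as they arrive instead of waiting for a complete batch, which is the essential difference from the MapReduce model.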

Closing Notes

There is a lot happening in this space. Interactive analysis and stream processing are a must for any big data implementation.

Google was pretty much the innovator, with Map/Reduce way back in 2003 and then Dremel. They are pretty much leading this space, with the open source world really quick to capitalize on these ideas and bring them to market. In contrast, the rest of the world outside Google (Microsoft, Oracle, IBM…) has a long way to sprint to keep up. The easiest way for them is to accept an open source implementation and roll it out quickly, for example HDInsight.

It's very clear that MSFT is pushing Impala in the interactive space alongside HDInsight.


2 comments:

will it be possible for you to explain what would be tools that analytics companies like BloomReach uses? I mean some case studies ripped wide-open.

I would be having another post coming on financial services sector and big data adoption that will cover what you asked.

Thanks

Ajay
